A Tale of Compilers and Languages

Editor’s note: In Fall 2021, I published my PhD dissertation “Proof-oriented domain-specific language design for high-assurance software”, and proceeded to resolutely forget all about it after the defense, as is customary. Indeed, I had spent a few busy months writing the introduction of the dissertation that binds all of my contributions together, and after so many edits and correction passes I could not stand to look at it anymore. Among those edits, I had written ten full pages at the very beginning of the dissertation as a general introduction and motivation as to why I had chosen to engage with formal methods, coming from a compiler engineering background. Following the good advice of my PhD advisors, I decided to cut this material from the already too long manuscript (300 pages), as it was not directly related to the main thesis of the dissertation. So, this text was left to rot in an orphaned background.tex file on my computer.

However, in the last few weeks, I was honored to learn that my dissertation had been singled out to receive the Gilles Kahn prize for the best French computer science dissertation of the year. Flattered by the prospect that at least one person on the jury had read my dissertation and enjoyed it, I realized that other people could also appreciate the bit that didn’t make it into the final piece. Hence, you will find below the full text of the “background” introduction to my dissertation. If you like it, I suggest you continue your reading with Chapter 1 of my dissertation, and thus experience a true director’s cut of this pensum about proof-oriented, domain-specific language design for high-assurance software. Be aware that this text was written in the summer of 2021 and may be somewhat outdated in its assessments and references.

As noted by Terry Winograd in his seminal 1979 paper (Winograd 1979), the software industry has always been confronted with the problem of safely and efficiently connecting together programs that live in different ecosystems. Winograd suggests that moving to higher-level, declarative, logical but executable descriptions of system behavior might be the solution to this problem. We generally agree with this vision, although instead of advocating going “beyond programming languages”, we rather bet on higher-level, safer, more descriptive programming languages that interact with each other thanks to advanced compilation techniques. In this sub-section, we examine whether this vision has been achieved, and focus on the recent evolution of two high-profile projects with a complicated relationship: LLVM and Rust.

Compilers As Critical Infrastructure

Forty years later, Winograd’s vision has been only partially achieved. On the one hand, the move towards higher-level programming languages has been confirmed by the wide adoption of automatic memory management and object-oriented programming in the industry. Moreover, functional programming and strong type systems are entering the mainstream, as algebraic data types and pattern matching (Bucher and Rossum 2020) or lambda expressions (Järvi and Freeman 2010; Mazinanian et al. 2017) inspire new features in many established languages.

On the other hand, the problem of efficiently connecting large systems together has led to a de facto hegemony of flagship programming languages, each with a unifying ecosystem: C and C++ for the systems and low-level world, JavaScript for the Web, etc. As central pieces of these ecosystems, compilers have received an unprecedented amount of attention from their communities, with the simple premise that it is cheaper to pay one qualified compiler engineer to improve the performance of all programs by 1% than to pay a thousand software engineers to rewrite all the programs more efficiently.

With the advent of the open-source philosophy in the 1990s and 2000s, the compiler market consolidated into an oligopoly of a handful of leading projects per language, a development consistent with the infrastructure role of this kind of software. In the C and C++ world, GCC and LLVM embody two different generations of compiler engineers, the latter being closer to academic research in the field. In the JavaScript world, the ongoing competition between Firefox’s SpiderMonkey and Chrome’s V8 has driven remarkable progress in Just-In-Time (JIT) compilation techniques.

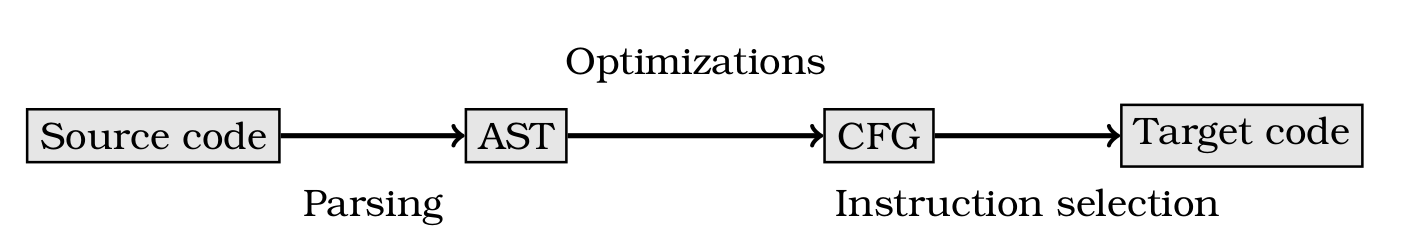

As a reminder, the different phases of the compilation process are illustrated in Figure 1. First, the compiler parses the text of the source code into an Abstract Syntax Tree (AST), a tree-shaped data structure that represents the whole program being compiled. Compiler optimizations then transform this representation to produce a new, smaller or faster program that behaves identically to the original one. At some point, compilers also often translate the AST into a Control Flow Graph (CFG), whose vertices are the program’s atomic instructions (usually grouped into basic blocks) and whose edges indicate how control passes from one instruction to another. This graph-based representation is useful for many optimizations. Finally, the compiler outputs the program in the target language, usually relying on some form of instruction selection to bridge the gap between the concepts of the source and target languages. The main contributions of the heavy compiler infrastructure cited above concern code optimizations, which improve the performance of the generated code without requiring any change to the source. These contributions can be categorized as follows.
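To make these phases concrete, here is a minimal sketch in Python for a hypothetical toy language of integer expressions (the names `tokenize`, `parse`, `fold`, and `emit` are ours, not taken from any real compiler). It parses source text into a tuple-based AST, runs a single optimization (constant folding) on that AST, and emits code for an imaginary stack machine. A real compiler would also build a CFG and perform instruction selection for an actual ISA; both are omitted here.

```python
import re

# Hypothetical toy language: integers, identifiers, '+', '*', and parentheses.
# AST nodes are tuples: ("num", n), ("var", name), ("+", lhs, rhs), ("*", lhs, rhs).
TOKEN = re.compile(r"\s*(\d+|[A-Za-z_]\w*|[+*()])")


def tokenize(src):
    """Lexing: split the source text into tokens."""
    src, tokens, pos = src.rstrip(), [], 0
    while pos < len(src):
        m = TOKEN.match(src, pos)
        if m is None:
            raise SyntaxError(f"unexpected character at offset {pos}")
        tokens.append(m.group(1))
        pos = m.end()
    return tokens


def parse(tokens):
    """Parsing: build the AST by recursive descent ('*' binds tighter than '+')."""
    pos = 0

    def peek():
        return tokens[pos] if pos < len(tokens) else None

    def advance():
        nonlocal pos
        pos += 1

    def expr():  # expr := term ('+' term)*
        node = term()
        while peek() == "+":
            advance()
            node = ("+", node, term())
        return node

    def term():  # term := atom ('*' atom)*
        node = atom()
        while peek() == "*":
            advance()
            node = ("*", node, atom())
        return node

    def atom():  # atom := NUMBER | IDENT | '(' expr ')'
        tok = peek()
        if tok is None or tok in ("+", "*", ")"):
            raise SyntaxError(f"unexpected token {tok!r}")
        advance()
        if tok == "(":
            node = expr()
            if peek() != ")":
                raise SyntaxError("expected ')'")
            advance()
            return node
        return ("num", int(tok)) if tok.isdigit() else ("var", tok)

    tree = expr()
    if pos != len(tokens):
        raise SyntaxError(f"unexpected token {tokens[pos]!r}")
    return tree


def fold(node):
    """Optimization: constant folding, evaluating operations on two constants."""
    if node[0] in ("num", "var"):
        return node
    op, lhs, rhs = node[0], fold(node[1]), fold(node[2])
    if lhs[0] == "num" and rhs[0] == "num":
        return ("num", lhs[1] + rhs[1] if op == "+" else lhs[1] * rhs[1])
    return (op, lhs, rhs)


def emit(node):
    """Code generation: post-order walk producing stack-machine instructions."""
    if node[0] == "num":
        return [f"push {node[1]}"]
    if node[0] == "var":
        return [f"load {node[1]}"]
    return emit(node[1]) + emit(node[2]) + ["add" if node[0] == "+" else "mul"]
```

For instance, `emit(fold(parse(tokenize("x * (2 + 3)"))))` yields `["load x", "push 5", "mul"]`: the constant sub-expression is computed at compile time, while the part depending on `x` is left for run time. This naive folding does not reassociate, so `2 + x + 3` is left untouched; real optimizers go further.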

Improvement of register allocation. A central piece of the compilation process is register allocation, which maps the high-level notion of program variables (whose number can be arbitrarily large) to machine hardware registers (whose number is fixed). While register allocation algorithms generally follow one of two principles, graph coloring (Chaitin et al. 1981) or linear scan (Poletto and Sarkar 1999), they need to be fine-tuned to the specific Instruction Set Architecture (ISA, the language of the processor) to achieve good performance, and rely on manually crafted heuristics that have been refined over time. The critical property of source programs to consider for this task is variable lifetime: the more the lifetimes overlap, the harder it is to allocate variables to registers without conflicts. Failure to produce a good register allocation leads to variable spilling, i.e. the use of stack memory to store temporary values. Spilling can hugely affect performance, since fetching a value from RAM is commonly estimated to take two orders of magnitude longer than fetching it from a register (although caches mitigate this cost for recently accessed stack slots).
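As an illustration of the linear scan principle, the Python sketch below follows the outline of Poletto and Sarkar's algorithm: live intervals are visited by increasing start point, registers of expired intervals are recycled, and when no register is free, the interval that ends last is spilled. It assumes that live intervals have already been computed (the `Interval` type and `linear_scan` function are hypothetical), hands out the lowest-numbered free register first, and ignores the ISA-specific constraints and tuned heuristics that production allocators add on top.

```python
from dataclasses import dataclass


@dataclass
class Interval:
    name: str   # program variable
    start: int  # index of the first instruction where the variable is live
    end: int    # index of the last instruction where the variable is live


def linear_scan(intervals, num_registers):
    """Linear scan register allocation, following Poletto and Sarkar (1999).

    Returns (assignment, spilled): a dict from variable to register number,
    and the set of variables that must be kept in stack memory instead.
    """
    assignment, spilled = {}, set()
    free = list(range(num_registers))
    active = []  # intervals currently holding a register, sorted by end point
    for cur in sorted(intervals, key=lambda iv: iv.start):
        # Expire intervals that ended before `cur` starts; recycle their registers.
        while active and active[0].end < cur.start:
            free.append(assignment[active.pop(0).name])
        if free:
            assignment[cur.name] = free.pop(0)
            active.append(cur)
        else:
            # No free register: spill whichever interval ends last.
            victim = active[-1] if active else None
            if victim is not None and victim.end > cur.end:
                assignment[cur.name] = assignment.pop(victim.name)
                spilled.add(victim.name)
                active[-1] = cur
            else:
                spilled.add(cur.name)
        active.sort(key=lambda iv: iv.end)
    return assignment, spilled
```

With two registers and three simultaneously live variables `x` (live over instructions 0 to 10), `y` (1 to 3) and `z` (2 to 4), the sketch keeps `y` and `z` in registers and spills the long-lived `x`, whose register is handed over to `z`.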

Memory-related code optimizations. The high cost of fetching values from RAM mentioned just above has motivated intense research into compiler optimizations that eliminate superfluous memory accesses. These optimizations mostly rely on an analysis of aliasing between the various pointers manipulated by the program (Ghiya and Hendren 1998; Das et al. 2001). As aliasing heavily depends on the control flow and data flow of the program, an exact analysis cannot be computed in reasonable time; rather, compiler engineers rely on fine-tuned heuristics. Another memory-related area of compiler optimization is data locality. Indeed, accesses to nearby memory locations within a short time window are sped up by the hardware cache system. Hence, it pays off to optimize the data structure layout (Carr, McKinley, and Tseng 1994) or to reorder memory accesses (Pike 2002). For concurrent programs, the latter is made more difficult by the complex semantics of the weak memory models provided by the hardware (Kang et al. 2017).
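The following Python sketch shows why the precision of alias analysis matters for eliminating memory accesses. It performs redundant load elimination and store-to-load forwarding on a single basic block. The instruction encoding and the `may_alias` oracle are hypothetical: the oracle stands in for a real alias analysis and must return `False` only when two pointers are guaranteed to refer to distinct locations. The block is assumed to be in SSA form, so a variable name always denotes the same value.

```python
def eliminate_redundant_loads(block, may_alias):
    """Redundant load elimination and store-to-load forwarding in one basic block.

    `block` is a list of ("load", dst, ptr) and ("store", ptr, value) tuples in
    SSA form. `may_alias(p, q)` is a hypothetical alias oracle: it returns False
    only if pointers p and q are guaranteed to refer to distinct locations.
    """
    known = {}  # pointer -> variable known to hold the value stored at *pointer
    out = []
    for instr in block:
        if instr[0] == "load":
            _, dst, ptr = instr
            if ptr in known:
                out.append(("copy", dst, known[ptr]))  # memory access avoided
            else:
                out.append(instr)
                known[ptr] = dst
        elif instr[0] == "store":
            _, ptr, value = instr
            # The store may overwrite any location that `ptr` may alias.
            known = {p: v for p, v in known.items() if not may_alias(p, ptr)}
            known[ptr] = value  # a later load of *ptr can reuse `value`
            out.append(instr)
        else:
            raise ValueError(f"unknown instruction {instr!r}")
    return out
```

With the conservative oracle `lambda p, q: True`, the store through `q` might overwrite `*p`, so a second load of `p` after that store must be kept; with an oracle able to prove that `p` and `q` are distinct, that load becomes a register copy.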

Improvement of instruction selection. Orthogonally to improving how the program accesses memory, it is also the compiler’s job to select the ISA-specific instructions that implement each high-level, language-specific operation efficiently. This task is especially critical on modern processors following the Complex Instruction Set Computer (CISC) philosophy, since there are many ways to perform the same computation, some faster than others depending on the circumstances. Again, writing a good instruction selection pass requires a lot of manual heuristics and deep expertise in the hardware ISA. A more theoretical approach models instruction selection as a tree or graph covering problem (Blindell 2016; Ebner et al. 2008). While an optimal tree covering can be computed efficiently by dynamic programming, covering general graphs yields significant performance improvements for the generated code but is NP-complete, and thus comes at the cost of calling external solvers. Inside mainstream compilers, such external solver calls are usually ruled out because of their prohibitive compilation time cost.
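For trees, the covering problem can be solved optimally with dynamic programming, which the Python sketch below illustrates on a hypothetical toy ISA with five instruction patterns (tiles), each assumed to have unit cost. For every IR node, it tries each tile that matches there, recursively covers the subtrees the tile leaves uncovered, and keeps the cheapest combination. The IR encoding, tile set, and function names are assumptions of this sketch, and register operands are omitted from the emitted code for brevity.

```python
from functools import lru_cache

# IR trees (hypothetical encoding): ("reg", name), ("const", n),
# ("add", lhs, rhs), ("load", address).
# Each matcher returns the subtrees left uncovered by its tile, or None.


def match_li(n):        # li rd, imm
    return [] if n[0] == "const" else None


def match_add(n):       # add rd, rs, rt
    return [n[1], n[2]] if n[0] == "add" else None


def match_addi(n):      # addi rd, rs, imm
    return [n[1]] if n[0] == "add" and n[2][0] == "const" else None


def match_ld(n):        # ld rd, [rs]
    return [n[1]] if n[0] == "load" else None


def match_ld_off(n):    # ld rd, [rs + imm]
    if n[0] == "load" and n[1][0] == "add" and n[1][2][0] == "const":
        return [n[1][1]]
    return None


# (instruction name, cost, matcher); unit costs are an assumption.
TILES = [("li", 1, match_li), ("add", 1, match_add), ("addi", 1, match_addi),
         ("ld", 1, match_ld), ("ld_off", 1, match_ld_off)]


@lru_cache(maxsize=None)
def select(node):
    """Return (cost, instructions) of a minimum-cost covering of the tree `node`."""
    if node[0] == "reg":  # value already in a register: nothing to emit
        return 0, ()
    best = None
    for name, cost, matcher in TILES:
        uncovered = matcher(node)
        if uncovered is None:
            continue
        total, code = cost, ()
        for sub in uncovered:  # cover the remaining subtrees first
            sub_cost, sub_code = select(sub)
            total += sub_cost
            code += sub_code
        code += (name,)
        if best is None or total < best[0]:
            best = (total, code)
    if best is None:
        raise ValueError(f"no tile covers {node!r}")
    return best
```

On `load(add(reg sp, const 8))`, the single tile `ld_off` (a load with an immediate offset) covers the whole tree, beating the two-instruction sequence `addi` then `ld`. Because tiles overlap, a greedy choice can be suboptimal in general, which is what the dynamic programming avoids.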

Control-flow and data-flow-related code optimizations. This class of optimizations is the most general-purpose one, found in almost all compilers. By representing the program as a graph data structure like a Control Flow Graph or a Sea of Nodes (Demange, Fernández de Retana, and Pichardie 2018), the compiler can reason about the way control and data flow through the atomic instructions. This kind of program analysis can detect unused values, loop-invariant instructions, common sub-expressions, etc. The list of those optimizations is long: Global Value Numbering (GVN) (Click 1995), Partial Redundancy Elimination (PRE) (Briggs and Cooper 1994), Common Sub-expression Elimination (CSE) (Cocke 1970), Dead Code Elimination (DCE) (Knoop, Rüthing, and Steffen 1994), etc. Unlike the previous ones, these optimizations are quite principled and do not feature as many heuristics or ISA-specific quirks. Instead, the challenge is to implement them efficiently, so that they can be run liberally at various phases of the compilation process.
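Two of the optimizations listed above can be sketched in a few lines of Python on a single basic block of three-address code: local value numbering, a block-local form of CSE and the starting point of GVN, followed by DCE driven by a backward liveness walk. The code assumes a hypothetical instruction format `(dst, op, arg1, arg2)` in SSA form, with side-effect-free operators; real implementations work on whole CFGs and must account for side effects.

```python
def local_value_numbering(block):
    """Block-local common sub-expression elimination.

    `block` is a list of (dst, op, arg1, arg2) in SSA form; operands are variable
    names (str) or integer constants. Returns the new block and a dict mapping
    each eliminated variable to the earlier variable holding the same value.
    """
    available = {}  # (op, operands) -> variable already holding that value
    replaced = {}   # eliminated variable -> equivalent earlier variable
    out = []
    for dst, op, a, b in block:
        a, b = replaced.get(a, a), replaced.get(b, b)
        operands = (a, b)
        if op in ("+", "*"):  # commutative: use a canonical operand order
            operands = tuple(sorted(operands, key=str))
        key = (op, *operands)
        if key in available:
            replaced[dst] = available[key]
        else:
            available[key] = dst
            out.append((dst, op, a, b))
    return out, replaced


def dead_code_elimination(block, live_out):
    """Drop side-effect-free instructions whose result is never used."""
    live = set(live_out)
    kept = []
    for dst, op, a, b in reversed(block):  # backward walk tracks liveness
        if dst in live:
            live.discard(dst)
            live.update(x for x in (a, b) if isinstance(x, str))
            kept.append((dst, op, a, b))
    kept.reverse()
    return kept
```

On the block `t1 = a + b; t2 = b + a; t3 = t1 * t2; t4 = a * 4` with only `t3` live at the exit, `t2` is recognized as equivalent to `t1` (addition being commutative) and `t4` is removed because nothing uses it, leaving `t1 = a + b; t3 = t1 * t1`.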

High-level invariant-based optimizations. All of the previous optimizations make rather local changes to the program being compiled. However, larger performance gains are possible if one is willing to restructure the program more broadly. For instance, consider nested loop computations, which are at the core of scientific computing and especially of matrix-multiplication-related programs. Reordering the loops and their indices using polyhedral models (Benabderrahmane et al. 2010; Grosser et al. 2011) can unlock better memory locality, early branch exits, etc. Loop-related optimizations are also critical to benefit from the newest hardware performance-boosting features like Single Instruction, Multiple Data (SIMD) instructions (Hohenauer et al. 2009).
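Loop interchange is one of the simplest reorderings that a polyhedral optimizer can discover automatically. The Python sketch below applies it by hand to matrix multiplication (the function names are ours). Both versions compute the same product; in fact, each `C[i][j]` accumulates its terms in the same increasing order of `k`, so results are identical even for floating-point inputs. The expected benefit is memory locality: in the `ikj` order, the innermost loop walks rows of `B` and `C`, which are contiguous in a row-major array as in C. Python lists do not have this memory layout, so the sketch only demonstrates the transformation, not its speed-up.

```python
def matmul_ijk(A, B, n):
    """Textbook loop order: the inner loop reads B column-wise (B[k][j], k varying)."""
    C = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                C[i][j] += A[i][k] * B[k][j]
    return C


def matmul_ikj(A, B, n):
    """Interchanged j and k loops: the inner loop reads B[k] and writes C[i] row-wise."""
    C = [[0] * n for _ in range(n)]
    for i in range(n):
        for k in range(n):
            a_ik = A[i][k]  # invariant in the inner loop, loaded once
            for j in range(n):
                C[i][j] += a_ik * B[k][j]
    return C
```

A compiler may only apply such a reordering when it respects the program's data dependences; here, the only dependence is the accumulation into each `C[i][j]`, whose order is preserved.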

Improvement of error and warning reporting to the user. An often overlooked area of compiler improvement is the quality of the error and warning messages returned to the user. Besides silently transforming the program, the compiler can also suggest better ways to write it. Research in this area is quite limited, as a survey indicates (Becker et al. 2019). A notable exception is Pottier’s framework for displaying useful syntax error messages for LR(1) grammars (Pottier 2016; Bour and Pottier 2021). However, recent history offers some hope for this line of work: while GCC’s error messages are famous for their obscurity, Clang, the C and C++ frontend of LLVM, provides an incremental improvement. More recently, Rust has taken an opinionated and forward-thinking approach (Turner 2016) that centers each error message on the relevant snippet of code, enhanced with notes and suggestions.

LLVM, Dreaming the Ultimate Backend

From the above description of common compiler optimizations, we draw several conclusions. First, all optimizations are a tradeoff between compile time and the performance of the generated code. Second, implementing these optimizations inside modern projects like LLVM required a lot of compiler engineering effort that cannot be automated. This effort goes into hardcoded heuristics informed by decades of experience compiling big, performance-sensitive codebases. Projects like LLVM or GCC each span several million lines of code. While compiler optimization research is ongoing and provides a lot of theoretical insights, we claim that the sum of work put into projects like LLVM constitutes an irreplaceable infrastructure that is very unlikely to be superseded in the coming decades. The machine learning community is starting to produce interesting tooling to automate these heuristics, but this approach seems to complement manual work rather than replace it (Wang and O’Boyle 2018).

Another look at the list of optimization categories reveals a distinction between the optimizations that are specific to an ISA and those that are specific to the input programming language. Hence, it is important to distinguish the compiler frontend, mostly responsible for the language-specific optimizations, from the compiler backend, which mostly deals with ISA-specific optimizations.

The project that most prominently embodies this distinction between frontend and backend is LLVM (Lattner 2002, 2008), with its compilation-platform-grade Intermediate Representation (IR) serving as the entry point to the backend. By isolating the compiler backend and creating a diverse community of programming languages relying on it, LLVM has been able to attract a lot of compiler engineers’ time. LLVM IR can be translated to dozens of ISAs, and is now a reference object of study for compilation research (Grosser et al. 2011; Nötzli and Brown 2016; Zhao et al. 2013; Lee, Hur, and Lopes 2019; Yang et al. 2011).

Beyond the Clang frontend for C and C++, many new programming languages now choose to emit LLVM IR instead of ISA-specific assembly to piggyback on this critical optimization infrastructure. Notable examples include Swift (Rebouças et al. 2016) from Apple (first released in 2014) and Rust (Matsakis and Klock 2014) from Mozilla (whose 1.0 version was released in 2015). It is worth noting that LLVM has not yet caught up with GCC in terms of architecture coverage: GCC supports more legacy ISAs, and thus cannot be replaced by LLVM in many real-world critical projects.

The natural question raised by this evolution is then: is LLVM IR the ultimate compilation target for all future statically compiled programming languages? While we cannot answer this definitively, we make the case that LLVM’s hegemony is not complete, and is unlikely ever to be. Of course, the ecosystem of the Java Virtual Machine continues to attract many new programming languages such as Scala or Clojure. But even in the realm of low-level, performance-oriented languages, LLVM is no longer the only backend option.

Rust and the Case for a Multi-Backend Compiler

The case of Rust is typical. Originally written as an LLVM frontend, the Rust compiler has recently moved towards accepting a second backend: Cranelift (Alliance 2019). The reasons for this move are detailed in a blog post (Williams 2020).

First, compile times for large Rust applications were becoming unmanageable as of 2019, threatening the language’s adoption. For instance, the results of the 2019 Rust survey mention that “Compiling development builds at least as fast as Go would be table stakes for us to consider Rust”. Benchmarks performed by the Rust compiler team then designated LLVM as the main culprit for slow compile times. While developers could accept waiting a long time for an optimized release build, such delays did not fit within a traditional write-compile-execute-debug workflow. In this setting, LLVM was still taking a lot of time even with all optimizations disabled. The reason behind LLVM’s slow pace is simply, as a compiler engineer reveals (Popov 2020), that the project never considered fast compile times as a goal; hence it did not set up, until very recently, the continuous integration tools that could monitor and improve this metric. Even though the complaints coming from the Rust community incentivized the LLVM community to act on this problem, it is unlikely that compile times will drop by the order of magnitude required to satisfy developers who just want a fast debug build.

The second reason for partially decoupling the Rust compiler from LLVM concerns subtle semantic mismatches. Even though LLVM IR is theoretically independent from its main frontend languages, C and C++, the semantics of the IR are closely modeled on the behavior of the C standard. This causes problems in the dark corners of the standard; for instance, a Rust bug involving infinite loops and uninhabited types (issue #28728) originated in an LLVM IR assumption, inherited from C++, that loops without side effects always terminate, which did not match the Rust semantics. This particular bug took 5 years to be fixed, as potential early fixes could cause performance regressions for a lot of Rust programs. With LLVM as the sole backend of the Rust compiler, it is tempting to match exactly the semantics of LLVM IR, and thus the semantics of C/C++. However, Rust sets out a different ambition, for instance by disallowing undefined behavior in safe code.

A third reason that paves the way for alternatives to LLVM as a backend for the Rust compiler is information loss. Indeed, the Rust compiler computes the lifetimes of all program variables during its infamous borrow checking phase. The rich structural invariants that the ownership and borrowing discipline imposes on Rust programs could be harvested for many compiler optimizations. Unfortunately, all of this information is erased when translating to LLVM IR, since this representation does not feature a concept of lifetime. Instead, LLVM has to recompute by itself approximate lifetimes for the Rust program variables, leading to slower compilation times and missed optimization opportunities. Furthermore, the strong typing of Rust is lost to LLVM, and with it a lot of safety guarantees when interoperating with programs in other languages. Designing an interoperability scheme beyond the basic C ABI is still an active research problem. Interestingly, Ahmed (Ahmed 2015) proposed in 2015 to start enriching LLVM IR with typing and structure information, but this research program has yet to yield concrete results beyond a first theoretical proof of concept (Scherer et al. 2018).

The New Web Lingua Franca and Matchmaker

All of the above arguments make the case for adding new backends to the Rust compiler, but the main driving force of the engineering effort to make this happen has been the emergence of an unusual programming language coming from the Web ecosystem: WebAssembly. Introduced in 2017, WebAssembly (Haas et al. 2017) is a portable execution environment supported by all major browsers and Web application frameworks. It is designed as an alternative to JavaScript that remains interoperable with it.

WebAssembly defines a compact, portable instruction set for a stack-based machine. The language is made up of standard arithmetic, control-flow, and memory operators. The initial version of the language only has four value types: 32-bit and 64-bit integers (i32, i64) and 32-bit and 64-bit floating-point numbers (f32, f64); integers carry no intrinsic signedness, which is instead determined by the operators applied to them. Importantly, WebAssembly is typed, meaning that a well-typed WebAssembly program can be safely executed without fear of compromising the host machine; out-of-bounds memory accesses trap, a check that engines commonly implement using the OS page protection mechanism. This allows applications to run independently and generally deterministically. WebAssembly applications also enjoy near-native performance, since WebAssembly instructions can typically be mapped directly to platform-specific assembly.
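The typing discipline of this stack machine can be illustrated by a small Python validator in the spirit of WebAssembly's own: it simulates the operand stack using types instead of values, and rejects any instruction whose operands do not have the expected types. The sketch covers only a handful of straight-line instructions (their names follow the WebAssembly text format, but immediates such as constant values are omitted) and ignores control flow, locals, and memory, which the validation algorithm of the specification handles. `ValidationError`, `SIGNATURES`, and `validate` are hypothetical names.

```python
class ValidationError(Exception):
    """Raised when an instruction sequence is ill-typed."""


# (popped types, pushed types) for a small subset of WebAssembly instructions.
SIGNATURES = {}
for t in ("i32", "i64", "f32", "f64"):
    SIGNATURES[f"{t}.const"] = ((), (t,))
    SIGNATURES[f"{t}.add"] = ((t, t), (t,))
    SIGNATURES[f"{t}.mul"] = ((t, t), (t,))
SIGNATURES["i32.wrap_i64"] = (("i64",), ("i32",))
SIGNATURES["i64.extend_i32_s"] = (("i32",), ("i64",))
SIGNATURES["f64.convert_i32_s"] = (("i32",), ("f64",))


def validate(instrs, result_types):
    """Type-check straight-line code by simulating the operand stack with types."""
    stack = []
    for op in instrs:
        if op not in SIGNATURES:
            raise ValidationError(f"unknown instruction {op}")
        params, results = SIGNATURES[op]
        for expected in reversed(params):  # operands are popped right to left
            if not stack:
                raise ValidationError(f"{op}: operand stack underflow")
            actual = stack.pop()
            if actual != expected:
                raise ValidationError(f"{op}: expected {expected}, got {actual}")
        stack.extend(results)
    if stack != list(result_types):
        raise ValidationError(f"expected results {result_types}, got {stack}")
    return True
```

For example, `i32.const; i32.const; i32.add; i64.extend_i32_s` validates with a single `i64` result, whereas adding an `i64` to an `i32` with `i32.add` is rejected before the code ever runs.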

Interaction with the rest of the environment, e.g. the browser or a JavaScript application, is done via an import mechanism, wherein each WebAssembly module declares a set of imports whose symbols are resolved when the compiled WebAssembly module is dynamically loaded (instantiated) by its host, such as the browser. As such, WebAssembly is completely platform-agnostic (it is portable) but also Web-agnostic (there is no mention of the Document Object Model or the Web in the specification).

The LLVM ecosystem took the early lead in targeting WebAssembly, by extending Emscripten (Zakai 2011), an existing LLVM-to-JavaScript compiler. Among the first applications of WebAssembly using Emscripten were games built with the Unity engine (Trivellato 2018), whose entire C++ codebase could be compiled into a sizeable WebAssembly binary that would run in the browser. At this point, the WebAssembly story meets our Rust and Cranelift thread: in Chrome and Firefox, WebAssembly JIT compilation had historically been performed by the existing JavaScript optimizing JIT compilers. To outperform Chrome, Mozilla, the company behind Firefox, launched the Cranelift project: an optimizing JIT compiler for WebAssembly, written in Rust, and centered around a single intermediate representation, Cranelift IR. Cranelift quickly caught the attention of Rust compiler engineers, because of the intuitive appeal of a Rust compiler written entirely in Rust, backend included. Moreover, the focus on fast compilation at the center of the Cranelift project was a perfect fit for the Rust community, whose complaints about LLVM’s slow compile times were at their peak.

Cranelift soon found two distinct but complementary uses. First, as a WebAssembly compiler inside the browser, or inside critical server infrastructure used in Content Delivery Networks (CDN). Indeed, CDNs have to deploy, in real time, many configuration and serving-related programs on machines with heterogeneous architectures (chosen dynamically to optimize cloud costs). Some CDN companies like Fastly (Hickey 2019) have invested heavily in WebAssembly as an ISA-independent language in which to encode the software to be deployed in real time. Second, as a fast backend alternative for Rust programmers who want to enjoy a seamless write-compile-execute-debug loop during development.

Lessons From a Past Life

The author of this dissertation played a humble part in those developments, as a compiler engineer at Mozilla during two consecutive internships. First, I wrote the early version of the WebAssembly frontend for Cranelift. Then, I helped refactor the backend of the Rust compiler so that it could later welcome the Cranelift backend. These experiences shaped my understanding of this complex programming-language landscape, and of how the different pieces of compiler infrastructure can be pieced together to meet the evolving needs of programmers. Hence, the conclusions of this background sub-section are as follows.

First, compilers are the receptacle of the time engineers spend optimizing them; the recipe for a successful compiler is to attract as many well-funded organizations and users as possible, so as to maximize the amount of manpower that goes into fine-tuning the various heuristics and complex algorithms necessary to generate high-quality output code. As such, mature projects like LLVM or GCC are bound to last for decades, and should be reused in modern developments to ensure interoperability and a production-ready user experience.

Second, and to balance the first point, it is unlikely that one compiler framework will ever become hegemonic. The design space is too big, and the foundational technical choices too deep, for a single project to cover all the dimensions of compiler success: compile time, performance of the generated code, safety guarantees, backend and frontend agility, etc. Hence, there will only be opinionated designs, which need to interoperate to avoid splitting the software community into isolated silos.

Third, as a consequence of the second point, translations are critical to navigate through this fragmented language ecosystem. The term translation encompasses what is called transpilation, meaning a translation from source code to source code between high-level languages, but can also designate an internal compiler pass between two intermediate representations. Nevertheless, translations become leaky abstractions whenever there is a semantic mismatch. The difficulty of correctly connecting two languages or representations for all possible programs is the main motivation for compiler certification, pioneered by Leroy (Leroy 2006). However, the daunting size of certification proofs and the complexity of the underlying theorems have prevented the widespread adoption of these formal methods.

Equipped with this line of thinking, we now move from the engineer-friendly compiler world to the research-heavy formal methods world, and to the sub-field that encompasses our work: program verification.

For the rest of the story, head to Chapter 1 of my PhD dissertation!

References

Ahmed, Amal. 2015. “Verified Compilers for a Multi-Language World.” In 1st Summit on Advances in Programming Languages (SNAPL 2015). Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik.

Alliance, The Bytecode. 2019. Cranelift. https://github.com/bytecodealliance/wasmtime/tree/main/cranelift.

Becker, Brett A, Paul Denny, Raymond Pettit, Durell Bouchard, Dennis J Bouvier, Brian Harrington, Amir Kamil, et al. 2019. “Compiler Error Messages Considered Unhelpful: The Landscape of Text-Based Programming Error Message Research.” In Proceedings of the Working Group Reports on Innovation and Technology in Computer Science Education, 177–210.

Benabderrahmane, Mohamed-Walid, Louis-Noël Pouchet, Albert Cohen, and Cédric Bastoul. 2010. “The Polyhedral Model Is More Widely Applicable Than You Think.” In International Conference on Compiler Construction, 283–303. Springer.

Blindell, G.H. 2016. Instruction Selection: Principles, Methods, and Applications. https://doi.org/10.1007/978-3-319-34019-7.

Bour, Frédéric, and François Pottier. 2021. “Faster Reachability Analysis for LR(1) Parsers.” In Conference on Software Language Engineering.

Briggs, Preston, and Keith D Cooper. 1994. “Effective Partial Redundancy Elimination.” ACM SIGPLAN Notices 29 (6): 159–70.

Bucher, Brandt, and Guido van Rossum. 2020. “PEP 634: Structural Pattern Matching.” https://www.python.org/dev/peps/pep-0634/.

Carr, Steve, Kathryn S McKinley, and Chau-Wen Tseng. 1994. “Compiler Optimizations for Improving Data Locality.” ACM SIGPLAN Notices 29 (11): 252–62.

Chaitin, Gregory J, Marc A Auslander, Ashok K Chandra, John Cocke, Martin E Hopkins, and Peter W Markstein. 1981. “Register Allocation via Coloring.” Computer Languages 6 (1): 47–57.

Click, Cliff. 1995. “Global Code Motion/Global Value Numbering.” In Proceedings of the ACM SIGPLAN 1995 Conference on Programming Language Design and Implementation, 246–57.

Cocke, John. 1970. “Global Common Subexpression Elimination.” In Proceedings of a Symposium on Compiler Optimization, 20–24.

Das, Manuvir, Ben Liblit, Manuel Fähndrich, and Jakob Rehof. 2001. “Estimating the Impact of Scalable Pointer Analysis on Optimization.” In International Static Analysis Symposium, 260–78. Springer.

Demange, Delphine, Yon Fernández de Retana, and David Pichardie. 2018. “Semantic Reasoning About the Sea of Nodes.” In Proceedings of the 27th International Conference on Compiler Construction, 163–73.

Ebner, Dietmar, Florian Brandner, Bernhard Scholz, Andreas Krall, Peter Wiedermann, and Albrecht Kadlec. 2008. “Generalized Instruction Selection Using SSA-Graphs.” In Proceedings of the 2008 ACM SIGPLAN-SIGBED Conference on Languages, Compilers, and Tools for Embedded Systems, 31–40.

Ghiya, Rakesh, and Laurie J. Hendren. 1998. “Putting Pointer Analysis to Work.” In Proceedings of the 25th ACM SIGPLAN-SIGACT Symposium on Principles of Programming Languages, 121–33. POPL ’98. New York, NY, USA: Association for Computing Machinery. https://doi.org/10.1145/268946.268957.

Grosser, Tobias, Hongbin Zheng, Raghesh Aloor, Andreas Simbürger, Armin Größlinger, and Louis-Noël Pouchet. 2011. “Polly - Polyhedral Optimization in LLVM.” In Proceedings of the First International Workshop on Polyhedral Compilation Techniques (IMPACT), 2011:1.

Haas, Andreas, Andreas Rossberg, Derek L. Schuff, Ben L. Titzer, Michael Holman, Dan Gohman, Luke Wagner, Alon Zakai, and JF Bastien. 2017. “Bringing the Web up to Speed with WebAssembly.” In ACM SIGPLAN Conference on Programming Language Design and Implementation (PLDI), 185–200.

Hickey, Pat. 2019. “Announcing Lucet: Fastly’s Native WebAssembly Compiler and Runtime.” https://www.fastly.com/blog/announcing-lucet-fastly-native-webassembly-compiler-runtime.

Hohenauer, Manuel, Felix Engel, Rainer Leupers, Gerd Ascheid, and Heinrich Meyr. 2009. “A SIMD Optimization Framework for Retargetable Compilers.” ACM Transactions on Architecture and Code Optimization (TACO) 6 (1): 1–27.

Järvi, Jaakko, and John Freeman. 2010. “C++ Lambda Expressions and Closures.” Science of Computer Programming 75 (9): 762–72.

Kang, Jeehoon, Chung-Kil Hur, Ori Lahav, Viktor Vafeiadis, and Derek Dreyer. 2017. “A Promising Semantics for Relaxed-Memory Concurrency.” ACM SIGPLAN Notices 52 (1): 175–89.

Knoop, Jens, Oliver Rüthing, and Bernhard Steffen. 1994. “Partial Dead Code Elimination.” ACM SIGPLAN Notices 29 (6): 147–58.

Lattner, Chris. 2008. “LLVM and Clang: Next Generation Compiler Technology.” In The BSD Conference. Vol. 5.

Lattner, Chris Arthur. 2002. “LLVM: An Infrastructure for Multi-Stage Optimization.” PhD thesis, University of Illinois at Urbana-Champaign.

Lee, Juneyoung, Chung-Kil Hur, and Nuno P Lopes. 2019. “AliveInLean: A Verified LLVM Peephole Optimization Verifier.” In International Conference on Computer Aided Verification, 445–55. Springer.

Leroy, Xavier. 2006. “Formal Certification of a Compiler Back-End or: Programming a Compiler with a Proof Assistant.” SIGPLAN Not. 41 (1): 42–54.

Matsakis, Nicholas D, and Felix S Klock. 2014. “The Rust Language.” ACM SIGAda Ada Letters 34 (3): 103–4.

Mazinanian, Davood, Ameya Ketkar, Nikolaos Tsantalis, and Danny Dig. 2017. “Understanding the Use of Lambda Expressions in Java.” Proceedings of the ACM on Programming Languages 1 (OOPSLA): 1–31.

Nötzli, Andres, and Fraser Brown. 2016. “LifeJacket: Verifying Precise Floating-Point Optimizations in LLVM.” In Proceedings of the 5th ACM SIGPLAN International Workshop on State of the Art in Program Analysis, 24–29.

Pike, Geoffrey Roeder. 2002. Reordering and Storage Optimizations for Scientific Programs. University of California, Berkeley.

Poletto, Massimiliano, and Vivek Sarkar. 1999. “Linear Scan Register Allocation.” ACM Transactions on Programming Languages and Systems (TOPLAS) 21 (5): 895–913.

Popov, Nikita. 2020. “Make LLVM Fast Again.” https://www.npopov.com/2020/05/10/Make-LLVM-fast-again.html.

Pottier, François. 2016. “Reachability and Error Diagnosis in LR(1) Parsers.” In Proceedings of the 25th International Conference on Compiler Construction, 88–98.

Rebouças, Marcel, Gustavo Pinto, Felipe Ebert, Weslley Torres, Alexander Serebrenik, and Fernando Castor. 2016. “An Empirical Study on the Usage of the Swift Programming Language.” In 2016 IEEE 23rd International Conference on Software Analysis, Evolution, and Reengineering (SANER), 1:634–38. IEEE.

Scherer, Gabriel, Max New, Nick Rioux, and Amal Ahmed. 2018. “FabULous Interoperability for ML and a Linear Language.” In International Conference on Foundations of Software Science and Computation Structures, 146–62. Springer, Cham.

Trivellato, Marco. 2018. “WebAssembly Is Here!” https://blog.unity.com/technology/webassembly-is-here.

Turner, Jonathan. 2016. “Shape of Errors to Come.” https://blog.rust-lang.org/2016/08/10/Shape-of-errors-to-come.html.

Wang, Zheng, and Michael O’Boyle. 2018. “Machine Learning in Compiler Optimization.” Proceedings of the IEEE 106 (11): 1879–1901.

Williams, Jason. 2020. “A Possible New Backend for Rust.” https://jason-williams.co.uk/a-possible-new-backend-for-rust.

Winograd, Terry. 1979. “Beyond Programming Languages.” Communications of the ACM 22 (7): 391–401.

Yang, Xuejun, Yang Chen, Eric Eide, and John Regehr. 2011. “Finding and Understanding Bugs in C Compilers.” In Proceedings of the 32nd ACM SIGPLAN Conference on Programming Language Design and Implementation, 283–94.

Zakai, Alon. 2011. “Emscripten: An LLVM-to-JavaScript Compiler.” In Proceedings of the ACM International Conference Companion on Object Oriented Programming Systems Languages and Applications Companion, 301–12.

Zhao, Jianzhou, Santosh Nagarakatte, Milo MK Martin, and Steve Zdancewic. 2013. “Formal Verification of SSA-Based Optimizations for LLVM.” In Proceedings of the 34th ACM SIGPLAN Conference on Programming Language Design and Implementation, 175–86.